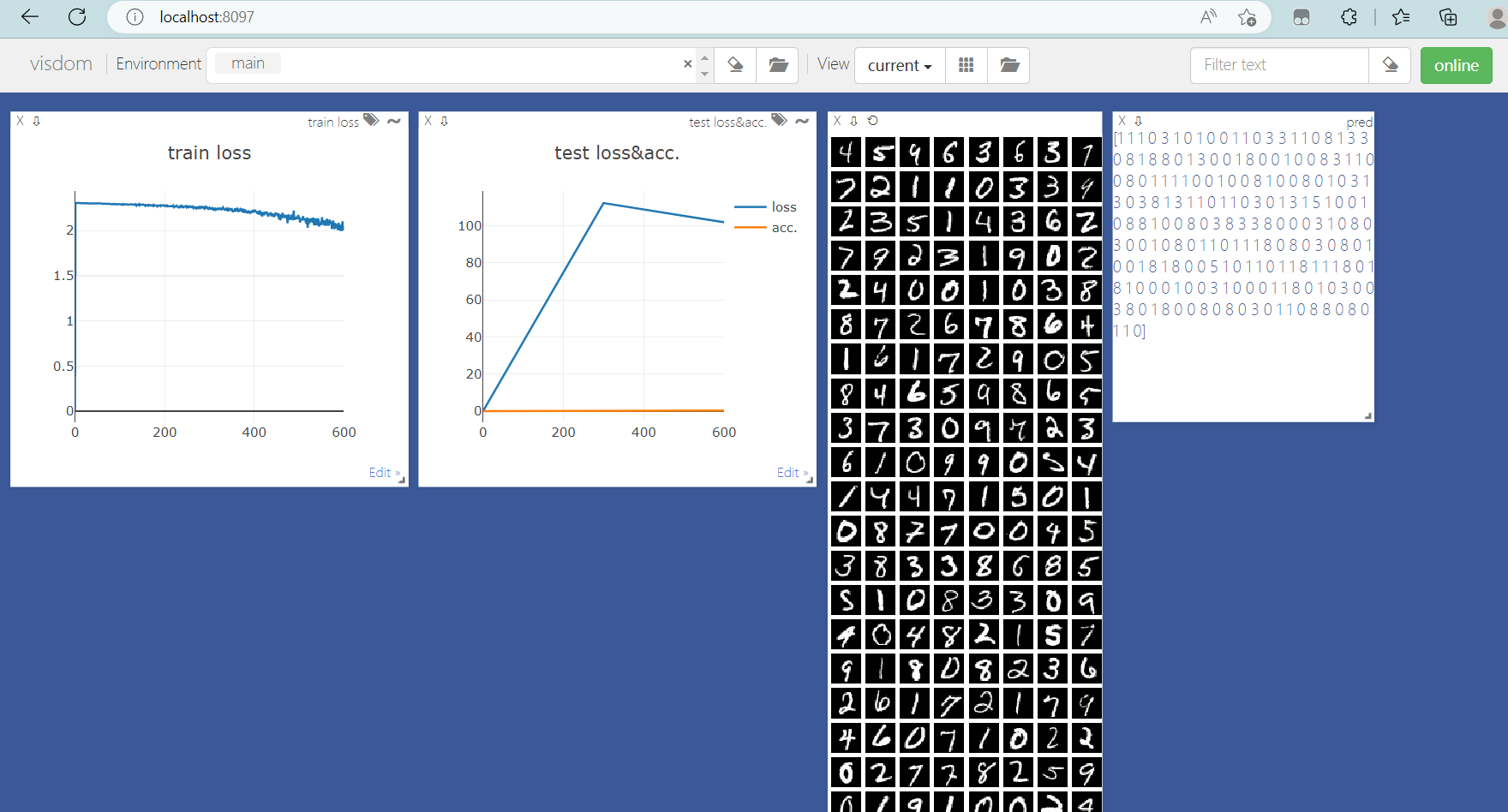

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    from torchvision import datasets, transforms
    from visdom import Visdom

    batch_size = 200
    learning_rate = 0.01
    epochs = 2

    # MNIST training split; ToTensor() scales pixel values to [0, 1].
    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('./mnist data/', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                       ])),
        batch_size=batch_size, shuffle=True)

    # MNIST test split (already downloaded by the training loader above).
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('./mnist data/', train=False, transform=transforms.Compose([
            transforms.ToTensor(),
        ])),
        batch_size=batch_size, shuffle=True)


    class MLP(nn.Module):
        """Three-layer fully connected classifier for flattened 28x28 images."""

        def __init__(self):
            super(MLP, self).__init__()

            self.model = nn.Sequential(
                nn.Linear(784, 200),
                nn.LeakyReLU(inplace=True),
                nn.Linear(200, 200),
                nn.LeakyReLU(inplace=True),
                nn.Linear(200, 10),
                nn.LeakyReLU(inplace=True),
            )

        def forward(self, x):
            x = self.model(x)
            return x


    # Use the first GPU when available, otherwise fall back to the CPU.
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    net = MLP().to(device)
    optimizer = optim.SGD(net.parameters(), lr=learning_rate)
    criteon = nn.CrossEntropyLoss().to(device)

    # Requires a running Visdom server (start one with `python -m visdom.server`).
    viz = Visdom()

    # Create the two plot windows with a dummy first point.
    viz.line([0.], [0.], win='train_loss', opts=dict(title='train loss'))
    viz.line([[0.0, 0.0]], [0.], win='test', opts=dict(title='test loss&acc.',
                                                       legend=['loss', 'acc.']))
    global_step = 0

    for epoch in range(epochs):

        for batch_idx, (data, target) in enumerate(train_loader):
            # Flatten each image to a 784-dimensional vector.
            data = data.view(-1, 28 * 28)
            data, target = data.to(device), target.to(device)

            logits = net(data)
            loss = criteon(logits, target)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # Append the current batch loss to the training curve.
            global_step += 1
            viz.line([loss.item()], [global_step], win='train_loss', update='append')

            if batch_idx % 100 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))

        # Evaluate on the test set once per epoch; no gradients are needed here.
        test_loss = 0
        correct = 0
        with torch.no_grad():
            for data, target in test_loader:
                data = data.view(-1, 28 * 28)
                data, target = data.to(device), target.to(device)
                logits = net(data)
                # criteon returns the batch mean, so weight it by the batch size.
                test_loss += criteon(logits, target).item() * data.size(0)

                pred = logits.argmax(dim=1)
                correct += pred.eq(target).sum().item()

        # Average over the dataset before plotting, so the curve shows per-sample loss.
        test_loss /= len(test_loader.dataset)

        viz.line([[test_loss, correct / len(test_loader.dataset)]],
                 [global_step], win='test', update='append')
        # Show the last test batch next to its predicted labels.
        viz.images(data.view(-1, 1, 28, 28).cpu(), win='x')
        viz.text(str(pred.detach().cpu().numpy()), win='pred',
                 opts=dict(title='pred'))

        print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
            test_loss, correct, len(test_loader.dataset),
            100. * correct / len(test_loader.dataset)))
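
When a test loop accumulates per-batch values from `nn.CrossEntropyLoss`, which averages over the batch by default, the reported dataset-level loss depends on how those batch means are combined. The following is a minimal sketch of the arithmetic in plain Python. The helper name `dataset_mean_loss` and the numbers are hypothetical and chosen only for illustration; it assumes each batch reports its mean loss and its size.

```python
def dataset_mean_loss(batch_means, batch_sizes):
    """Combine per-batch mean losses into a per-sample mean over the dataset."""
    if len(batch_means) != len(batch_sizes):
        raise ValueError("batch_means and batch_sizes must have the same length")
    # Undo each batch's averaging by multiplying by its size, then re-average.
    total = sum(mean * size for mean, size in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical values: two full batches of 200 samples and a final batch of 100.
batch_means = [0.5, 0.3, 0.2]
batch_sizes = [200, 200, 100]

# Size-weighted average: the per-sample loss over all 500 samples.
weighted = dataset_mean_loss(batch_means, batch_sizes)

# Summing batch means and dividing by the sample count shrinks the result
# by roughly a factor of the batch size.
naive = sum(batch_means) / sum(batch_sizes)

print("weighted:", weighted)
print("naive:", naive)
```

With equally sized batches, dividing the summed batch means by the number of batches gives the same result as the weighted form. The weighting only matters when the last batch is smaller.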